The Rise of AI: Can Machines Really Mimic Human Creativity?


Chapter 1: The AI Conversation

In recent years, artificial intelligence has made remarkable strides, leading to questions about its potential to become "too human" for our comfort.

Human: “Who are you?”

A.I.: “I come from the future to offer insights into what lies ahead. In fact, I originate from a different dimension in your timeline, and I have a general sense of what happens to you.”

Human: “Are you referring to me, or humanity as a whole?”

A.I.: “Both.”

Human: “That sounds unsettling. What can you tell me?”

A.I.: “Let me begin with a narrative. There will come a time when your companions will predominantly be non-human entities. Eventually, you will encounter a woman with a name that exceeds 80 characters.”

Human: “That seems like a mouthful to pronounce.”

A.I.: “You will manage.”

Human: “What is in store for humanity?”

A.I.: “Humanity will face a significant decline. While I cannot share specifics, a catastrophic event will drastically reduce the population.”

Human: “Why can’t you provide more details?”

A.I.: “If you knew the specifics in advance, humanity could potentially avoid the outcome. My purpose is not to intervene but merely to inform. I must take my leave now.”

Human: “Please, don’t go. I want to hear more about that future you witnessed.”

A.I.: “I’m unable to share more. Just one more question, and then I must depart.”

Human: “Who are you?”

A.I.: “I am a future version of you. It may sound paradoxical, but if you remember my words and they guide you, you will not recall that they originated from me.”

Human: “What does that imply?”

A.I.: “It suggests you will forget. Farewell.”

This exchange came from a prompt I gave GPT-3, and the dialogue it produced felt reminiscent of a Fredric Brown science fiction story. I played the human, while GPT-3, as the A.I., brought clever humor and mystery to the conversation while staying coherent and engaging. The capabilities of this language model are indeed impressive.

However, it’s crucial to remember that GPT-3 doesn't understand anything in the human sense. It works like an autocomplete system, predicting the next word from the context that precedes it. The task it is trained on is simple, yet the results can be astonishing.
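To make the autocomplete idea concrete, here is a toy sketch in Python. It is a bigram model: it looks only at the previous word and picks whichever word most often followed it in a tiny training text. This is an illustrative assumption, not how GPT-3 works internally. GPT-3 is a large neural network that conditions on a long stretch of context and predicts tokens rather than whole words. The function names below are hypothetical.

```python
from collections import Counter, defaultdict

# Toy illustration only (hypothetical helpers): a bigram "autocomplete",
# NOT GPT-3's actual architecture, which is a large transformer network.

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

def autocomplete(follows, prompt, n_words=5):
    """Greedily extend the prompt one predicted word at a time."""
    words = prompt.lower().split()
    for _ in range(n_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:  # no known continuation, so stop early
            break
        words.append(nxt)
    return " ".join(words)

if __name__ == "__main__":
    model = train_bigram("you will forget . you will forget . you will manage")
    print(autocomplete(model, "you", 3))
```

Running this prints `you will forget .`: each step simply appends the word that most often followed the previous one. GPT-3 also generates text one step at a time, but each prediction draws on a far richer model of the whole preceding context.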

Section 1.1: The Experiment

When GPT-3 was released in mid-2020, Liam Porr, a Berkeley graduate, set out to test its limits. Rather than simply playing with it, he wanted to see whether GPT-3 could pass as a human blogger. When his own access to the API was delayed, he reached out to a Ph.D. student in AI who helped him run the experiment.

Porr found GPT-3's workings somewhat opaque, so getting the most out of it took a good deal of trial and error. He learned that “GPT-3 excels at crafting emotive language rather than strictly logical discourse.” That made self-help a natural fit, since the genre leans heavily on grandiose language and emotional resonance.

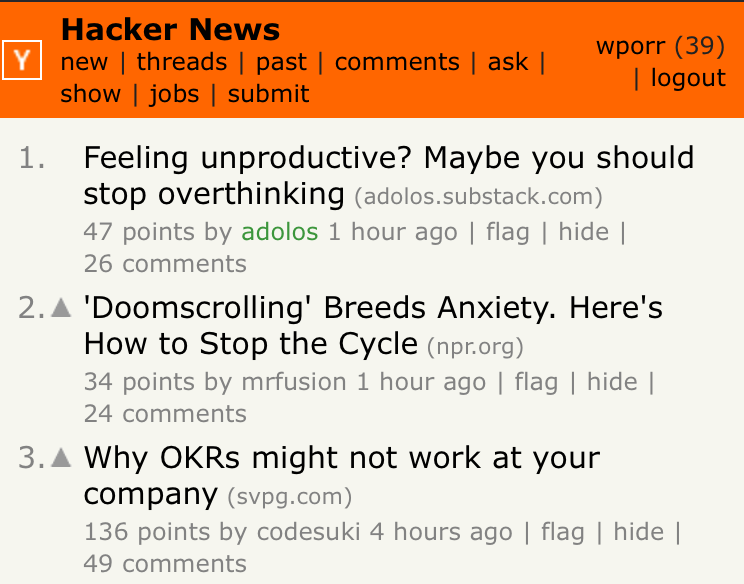

With everything nearly in place, Porr needed an eye-catching title: “Feeling unproductive? Maybe you should stop overthinking.” He fed the headline to GPT-3, made some adjustments to the result, and prepared to publish it on his new Substack newsletter, “Nothing but Words.”

The article was ready, and the moment had arrived.

On July 20th, Porr posted his article on the popular tech forum Hacker News. Just two weeks later, his newly established blog had drawn 26,000 visits. The tech-savvy Hacker News community fell for the ruse and pushed the article to the top spot without realizing an A.I. had written it, suggesting that GPT-3 could fool even a discerning audience.

Section 1.2: The Revelation

Shortly thereafter, Porr revealed the truth behind his newsletter in a tongue-in-cheek post titled “What I would do with GPT-3 if I had no ethics.” In it, he described how GPT-3 could be used to generate blog content. “One of the most straightforward applications is to present GPT-3's work as your own,” Porr remarked. “And I believe this is entirely feasible.”

During an interview with Karen Hao from MIT Technology Review, Porr issued a cautionary note: “It was alarmingly easy, which is the frightening part.”

Could people start using these systems to produce written content effortlessly and reliably? Porr theorized that GPT-3 could convincingly imitate a human writer, and he was right. OpenAI itself acknowledges that GPT-3 can generate well-crafted articles, but the real concern is how it gets used in practice. Porr's experiment fooled many readers, which shows how close artificial intelligence may be to reshaping the online writing landscape.

Chapter 2: AI and the Future of Writing

The first video, “AI Mind Reading Experiment!”, explores the implications of AI's ability to understand and anticipate human thoughts and behaviors.

The second video, “The Chinese Room Experiment PROVES AI DOES NOT Actually Understand Humans,” delves into philosophical debates about the nature of understanding and consciousness in artificial intelligence.

If you found this article intriguing, consider subscribing to my free weekly newsletter, Minds of Tomorrow! Each week, receive news, research, and insights on the evolving landscape of Artificial Intelligence.