Self-Driving Vehicles: The Intersection of AI, Philosophy, and Physics


Chapter 1: Understanding Self-Driving Cars

The concept of self-driving cars offers a unique perspective on the interplay between artificial intelligence (AI), philosophy, and physics. In this context, AI refers to algorithms capable of performing tasks that typically require human intelligence, such as visual recognition, speech processing, decision-making, and language translation. When we delve beneath the surface of autonomous vehicles, we discover a complex "philosophical brain" at work.

Autonomous cars are equipped with an extensive array of components dedicated to sensory perception, localization, signaling, and control. The challenge is how these intricate systems, which process an enormous amount of data, can make split-second decisions that may affect human lives.

Everything should be made as simple as possible, but no simpler — Albert Einstein

Section 1.1: The Role of Occam's Razor

To manage this complexity, the algorithms behind self-driving cars draw on a principle known as Occam's Razor. This philosophical principle, attributed to William of Ockham, holds that when several competing hypotheses explain the same observations equally well, one should favor the one that relies on the fewest assumptions.

Self-driving vehicles use sophisticated image-processing techniques to detect and recognize the objects around them. This capability can be likened to the "eyes" of the car. Before these autonomous systems can operate on public roads, however, their algorithms must be trained rigorously on extensive datasets. A critical challenge is to learn from this data without overfitting, which occurs when a model becomes so closely tailored to its training data that it predicts poorly on data it has not seen.
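Fitting polynomials of increasing degree to a handful of noisy points is a small, self-contained way to see overfitting and an Occam-style remedy. The sketch below is illustrative only and does not describe how any particular self-driving stack works. The toy straight-line data, the degrees tried, the 10% tolerance, and the helper names `fit_and_score` and `simplest_adequate` are all assumptions. A degree-9 polynomial can pass through all ten training points, so its training error is essentially zero. Between those points it typically swings about and does worse on held-out data. The selection rule therefore prefers the lowest degree whose validation error is close to the best.

```python
import numpy as np

def rmse(pred, target):
    """Root-mean-square error between two arrays."""
    return float(np.sqrt(np.mean((pred - target) ** 2)))

def fit_and_score(degree, x_train, y_train, x_val, y_val):
    """Fit a polynomial of the given degree; return (train RMSE, validation RMSE)."""
    coeffs = np.polyfit(x_train, y_train, degree)
    train_err = rmse(np.polyval(coeffs, x_train), y_train)
    val_err = rmse(np.polyval(coeffs, x_val), y_val)
    return train_err, val_err

def simplest_adequate(val_errors, tol=0.1):
    """Occam-style rule (hypothetical helper): return the smallest degree whose
    validation error is within a relative tolerance `tol` of the best one."""
    best = min(val_errors.values())
    return min(d for d, e in val_errors.items() if e <= best * (1 + tol))

# Toy data (assumed for illustration): a straight line plus Gaussian noise.
rng = np.random.default_rng(0)
x_train = np.linspace(0.0, 1.0, 10)
y_train = 2.0 * x_train + 1.0 + rng.normal(0.0, 0.1, x_train.size)
x_val = np.linspace(0.05, 0.95, 10)
y_val = 2.0 * x_val + 1.0 + rng.normal(0.0, 0.1, x_val.size)

val_errors = {}
for degree in (1, 3, 9):
    train_err, val_err = fit_and_score(degree, x_train, y_train, x_val, y_val)
    val_errors[degree] = val_err
    print(f"degree {degree}: train RMSE {train_err:.3f}, validation RMSE {val_err:.3f}")

print("simplest adequate degree:", simplest_adequate(val_errors))
```

The tolerance is arbitrary. It encodes the razor's preference for fewer parameters whenever the evidence does not clearly favor a more complex model.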

Subsection 1.1.1: Addressing Over-Fitting

To mitigate overfitting, engineers apply dimensionality reduction techniques. Eliminating inputs that contribute only noise or redundant information leaves the model fewer parameters to fit, which helps self-driving algorithms make better predictions on new data.
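Principal component analysis (PCA) is one widely used dimensionality-reduction technique. The text does not say which method a given vehicle uses, so treat the following as a generic sketch. It relies on NumPy's SVD and a made-up feature matrix in which the third column is a combination of the first two. Two components therefore capture essentially all of the variance, and the redundant input can be dropped.

```python
import numpy as np

def pca(X, n_components):
    """Project X (samples x features) onto its top principal components.

    Returns the projected data and the fraction of total variance
    explained by each kept component."""
    X_centered = X - X.mean(axis=0)
    # The right singular vectors of the centered data are the principal directions.
    _, s, vt = np.linalg.svd(X_centered, full_matrices=False)
    variance = s ** 2
    explained = variance / variance.sum()
    components = vt[:n_components]
    return X_centered @ components.T, explained[:n_components]

# Hypothetical sensor features: the third column is a linear combination
# of the first two, so it adds redundancy but no new information.
rng = np.random.default_rng(0)
a = rng.normal(size=200)
b = rng.normal(size=200)
X = np.column_stack([a, b, 0.5 * a + 0.5 * b])

Z, explained = pca(X, n_components=2)
print(Z.shape)          # (200, 2)
print(explained.sum())  # very close to 1.0: two components keep almost all the variance
```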

Section 1.2: Insights from Physics

Our understanding of the physical world also plays a crucial role in simplifying complex problems. In their paper "Why does deep and cheap learning work so well?", Henry W. Lin, Max Tegmark, and David Rolnick argue that the success of deep neural networks depends not only on mathematics but also on physics. Mathematical theorems guarantee that neural networks can approximate essentially any function. The authors point out, however, that the functions of practical interest, such as those behind image classification, can often be approximated with far fewer parameters than generic arguments would suggest.
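As I read Lin, Tegmark, and Rolnick's paper, one illustration they give is that multiplying two numbers, a basic building block of polynomials, needs only four hidden neurons. The sketch below assumes a softplus activation, whose second derivative at zero is 1/4. It also assumes a small input scale `lam`. Both are choices made for this example, and the function name is hypothetical. Expanding each neuron to second order, the four terms combine into 4·σ''(0)·λ²·x·y, so dividing by that factor recovers x·y. The approximation improves as `lam` shrinks.

```python
import math

def softplus(z):
    """Smooth activation log(1 + e^z); its second derivative at 0 is 1/4."""
    return math.log1p(math.exp(z))

def multiply_with_four_neurons(x, y, lam=0.01):
    """Approximate x * y with one hidden layer of four softplus neurons.

    Hidden pre-activations: lam*(x+y), -lam*(x+y), lam*(x-y), -lam*(x-y).
    Output weights: +1, +1, -1, -1, scaled by 1 / (4 * softplus''(0) * lam**2).
    """
    u, v = lam * x, lam * y
    hidden = (softplus(u + v) + softplus(-u - v)
              - softplus(u - v) - softplus(-u + v))
    second_derivative_at_zero = 0.25  # sigmoid(0) * (1 - sigmoid(0))
    return hidden / (4 * second_derivative_at_zero * lam ** 2)

print(multiply_with_four_neurons(0.5, 0.3))  # approximately 0.15
```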

Chapter 2: Unraveling the Combinatorial Swindle

The number of possible mathematical functions vastly exceeds the number of networks that could be built to approximate them, yet deep neural networks routinely produce accurate results. This apparent paradox, dubbed the "combinatorial swindle," suggests that our physical world provides essential clues for efficient learning.
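The scale of this mismatch is easy to underestimate, so here is the arithmetic for one concrete case. It assumes a one-megapixel grayscale image with 256 gray levels and a hypothetical network with ten million parameters. The count of possible images is computed as a base-10 logarithm, because the exact integer would have millions of digits.

```python
import math

pixels = 1_000_000           # assumed: a one-megapixel image
gray_levels = 256            # assumed: 8-bit grayscale
network_params = 10_000_000  # hypothetical network size

# Distinct images = gray_levels ** pixels; take log10 instead of building the integer.
log10_images = pixels * math.log10(gray_levels)

# A fully general classifier needs one probability per possible image,
# while the network is specified by only network_params numbers.
print(f"possible images:    about 10^{log10_images:,.0f}")          # about 10^2,408,240
print(f"network parameters: about 10^{math.log10(network_params):.0f}")  # about 10^7
```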

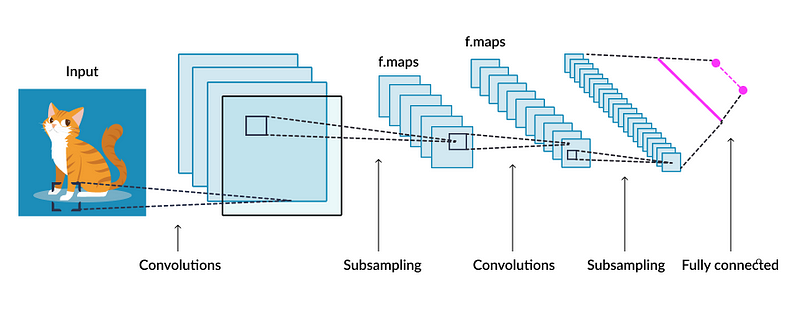

Patterns found in nature, such as symmetry and hierarchy, help manage the complexity of machine learning. Convolutional neural networks, for instance, exploit translational symmetry by reusing the same small filter at every position in an image, which makes image classification far more tractable.
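The sketch below puts a number on the savings from symmetry. It compares the weights needed to map a 28x28 image to a same-sized output with a fully connected layer against a single shared 3x3 convolutional filter. The filter's weight count does not depend on the image size at all. The image and filter sizes are assumptions chosen for illustration, and biases are ignored.

```python
def dense_weight_count(height, width):
    """Fully connected: each output pixel has its own weight for every input pixel."""
    n = height * width
    return n * n

def conv_weight_count(kernel_size):
    """Convolution: one small filter is reused at every position (translational
    symmetry) and only sees a local patch (locality)."""
    return kernel_size * kernel_size

print(dense_weight_count(28, 28))  # 614656
print(conv_weight_count(3))        # 9
```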

Convolutional neural networks slide small filters across an image to detect local patterns and build up layers of features. Early layers respond to simple patterns such as edges, and later layers combine them into more complex shapes, progressively improving the network's ability to classify images accurately.
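Here is a minimal NumPy sketch of one such layer: a hand-made vertical-edge filter slides across a toy 6x6 image, followed by a ReLU and 2x2 max pooling. The image, the filter values, and the helper names are assumptions for illustration. Real CNNs learn their filters from data and stack many layers. Like most deep-learning libraries, `conv2d` here computes cross-correlation, meaning the kernel is not flipped.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation: slide the same kernel over every position
    and take the weighted sum of the patch underneath it."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

# Toy 6x6 image: dark left half, bright right half (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-made vertical-edge filter (assumed; a trained CNN would learn its own).
vertical_edge = np.array([[-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0]])

features = relu(conv2d(image, vertical_edge))
print(features)
print(max_pool(features))
```

Every row of the resulting 4x4 feature map reads 0, 3, 3, 0. The filter responds only where its patch straddles the dark-to-bright boundary. Pooling then summarizes that response at a coarser scale, which is the kind of input a next layer would build on.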

Conclusion: The Philosophical Implications of AI

The evolution of our cognition has led us to seek understanding in the complexities of the universe. AI algorithms are a continuation of that quest to decode our surroundings. As we create these technologies, our biases and philosophical frameworks inevitably shape them. The pursuit of harmony amid this dissonance is a fundamental aspect of our nature. Ironically, the concerns we harbor about AI merely reflect our collective conscience.