Enhancing Professionalism in the Agile Community through Science

A while back, I urged the Agile community to lean more on scientific findings and less on individual opinions. My concern is our widespread reliance on appeals to authority, subjective experience, hearsay, and wishful thinking, which I believe contributes to the decline of Agile practices. Our field suffers when individuals make bold statements without solid evidence or offer solutions that have never been validated. How can we be sure that what we present to teams and clients isn’t mere deception? The threshold for what is accepted as “truth” in our profession is alarmingly low.

I’m not the only one voicing this concern. Influencers like Nico Thümler, Karen Eilers, Luxshan Ratnaravi, Takeo Imai, and Joseph Pelrine share similar sentiments. Since raising this issue, I have tried to bring more scientific insights into our field through research initiatives and a series of blog posts that summarize scientific findings on Agile-related topics. However, I have encountered unexpected resistance from within our community. Others have approached me out of curiosity, asking how this shift may affect their work.

In this article, I will address the most frequent objections and inquiries regarding the integration of scientific research into our discipline and clarify what my appeal does not imply.

The Importance of Scientific Research to Me

The Agile profession is relatively young and dynamic, characterized by a strong idealistic ethos. A glance at LinkedIn or platforms like Serious Scrum reveals numerous deeply held convictions about what is considered right or wrong. Most of us are drawn to this field due to our strong beliefs in lofty goals such as “delivering value,” “cultivating humane workplaces,” and “facilitating empiricism.” We also hold various views on how to achieve these aims, often endorsing specific frameworks (like Scrum, Kanban, XP) while dismissing others (like SAFe).

This idealism extends to opinions on team composition (diverse versus homogeneous, stable versus fluid, technical versus non-technical), necessary roles, specific practices, and essential cultural characteristics within organizations. Consequently, each of us harbors beliefs about how teams and organizations should operate. Yet, the lively debates on social media reveal significant disagreement on these matters. Some assert that product managers and product owners are identical roles, while others oppose this notion. Some advocate for estimation, while others vehemently reject it. These questions are empirical and can be convincingly answered with adequate data, but this is seldom attempted amidst a barrage of opinions.

Scientific inquiry is essential to me because it represents the best systematic method we've devised for discerning truth in the natural world. When conducted correctly, it minimizes biases and closely approximates how the world functions. Ethically, I believe it is my duty as a professional to adopt practices that work and reject those that don’t. I also feel obligated to substantiate claims with evidence that corresponds to the strength of the claim. For example, if I assert that “All Scrum Masters need technical skills,” I must provide evidence from a substantial sample of Scrum Masters, both with and without technical skills, and link this to their teams’ effectiveness.

If such evidence is lacking or unknown to me, I should moderate my assertion to match the evidence I do have: “Based on my experience with X teams, I’ve noticed that Scrum Masters with technical skills seem to have more effective teams.” This is precisely where scientific research plays a crucial role. Because science is our foremost method for determining truth, we should rely on scientific evidence to support our claims about best practices and guidelines. Such evidence can also help to challenge unsupported assertions.
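To make the idea of “evidence from a substantial sample” more concrete, here is a minimal Python sketch of one way such a comparison could be tested: a permutation test on the difference in mean team effectiveness between two groups of Scrum Masters. Everything in it is an assumption for illustration. The scores are invented, the function name `permutation_test` is hypothetical, and a real study would need a far larger, representative sample and a validated effectiveness measure.

```python
import random

def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Return (observed difference in means, approximate two-sided p-value)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n_a = len(group_a)
    observed = sum(group_a) / n_a - sum(group_b) / len(group_b)
    pooled = list(group_a) + list(group_b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Randomly reassign the group labels and recompute the difference.
        rng.shuffle(pooled)
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / (len(pooled) - n_a)
        if abs(diff) >= abs(observed):
            at_least_as_extreme += 1
    return observed, at_least_as_extreme / n_permutations

# Invented team-effectiveness scores (1-7 scale), for illustration only.
technical = [5.1, 4.8, 5.6, 4.9, 5.3, 5.0, 4.7, 5.4]
non_technical = [4.9, 5.2, 4.6, 5.0, 4.8, 5.1, 4.5, 5.3]

diff, p_value = permutation_test(technical, non_technical)
print(f"difference in means: {diff:.3f}, p-value: {p_value:.3f}")
```

The p-value estimates how often a difference at least this large would appear if technical skills made no difference at all. A large p-value would mean that these data cannot support a strong claim in either direction, which is exactly the situation in which the assertion should be moderated.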

The Role of Individual Experiences

Many Scrum Masters, Agile Coaches, and others share their personal experiences through blogs and social media. Does my appeal suggest that these experiences lack value in discerning what works?

Absolutely not. There is always a place for individual experiences. The scientific method is founded on the principle that our sensory experiences should serve as a primary source of knowledge. Our personal experiences with teams and organizations can shed light on how things might function. For instance, you might observe that larger teams tend to be less effective than smaller ones or that pair programming results in higher-quality code.

However, individual experiences are susceptible to numerous biases. Our brains tend to remember instances that affirm our beliefs while disregarding those that contradict them (confirmation bias). If I am convinced that all teams must be as stable as possible, I am more likely to recall instances where this was true. Additionally, selection bias may lead me to experience situations that reinforce my beliefs. For example, having developed my Agile expertise in smaller organizations, I might believe that Agile is more effective there than in larger settings, but this perspective is highly biased. More objective data may not support my view. An extreme case is the “N=1 bias,” where a single experience is generalized to encompass all scenarios. This is common in many “best practices” discussions within our industry, leading some to entirely dismiss estimation because it was ineffective in their context.

The essence of this is that individual experiences can highlight potential patterns. However, we cannot confidently generalize these patterns across different situations.

In summary, while our individual experiences can indeed inform us of potential patterns (e.g., “SAFe caused chaos in my organization” or “Pair programming enhanced our code quality”), such observations cannot be transformed into universal claims without more robust evidence. Generalization is only valid when we can aggregate numerous experiences from a representative sample and confirm that the pattern holds true there. When this occurs, we can assert our claims with confidence.

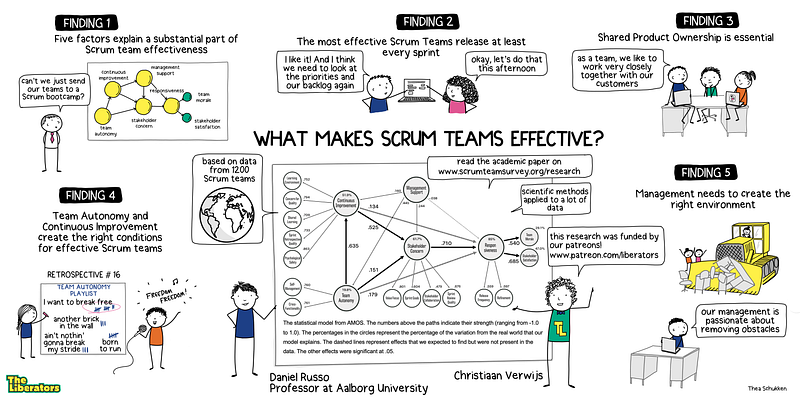

This is essentially what many scientific disciplines strive to achieve; they identify potential patterns from individual experiences (qualitative research) and test whether those can be generalized to a broader population (quantitative research). For instance, we can assert with significant confidence that teams learn more effectively in environments characterized by psychological safety (Edmondson, 1999). Similarly, we know that teams that release more frequently typically have more satisfied stakeholders (Verwijs & Russo, 2023).
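The gap between a single experience and a pattern that holds across a sample can be illustrated with a small simulation. The Python sketch below assumes, purely for illustration, that some practice improves team outcomes by a modest amount on average while results vary widely from team to team. The effect size, the spread, the normal distribution, and the function name `simulate_team_effects` are all hypothetical choices, not findings from any study.

```python
import random

def simulate_team_effects(n_teams, true_mean_effect, spread, seed=42):
    """Draw one observed effect per team from an assumed normal distribution."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return [rng.gauss(true_mean_effect, spread) for _ in range(n_teams)]

# Assumed scenario: the practice helps a little on average (+0.3),
# but individual teams differ a lot (standard deviation 1.0).
effects = simulate_team_effects(n_teams=1000, true_mean_effect=0.3, spread=1.0)

# Share of teams whose own experience says "this practice made things worse".
share_negative = sum(e < 0 for e in effects) / len(effects)

# What the aggregate of all experiences says.
sample_mean = sum(effects) / len(effects)

print(f"teams that saw a negative effect: {share_negative:.0%}")
print(f"average effect across all teams:  {sample_mean:.2f}")
```

Under these assumptions, a sizeable minority of teams would sincerely report that the practice did not work for them, even though it helps on average. Each of those reports is a valid observation, but only the aggregate shows the pattern.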

Should We Rely Solely on Experimental Evidence?

Another objection comes from people who acknowledge the usefulness of scientific evidence but set the bar so high that almost nothing qualifies. If collecting acceptable evidence appears impossible, anyone can make claims without grounding them in science.

One common assertion is that only evidence from double-blind, controlled experiments is acceptable. In these studies, researchers manipulate one variable while keeping everything else constant and measure specific outcomes. If the outcomes change after the manipulation, the change can be confidently attributed to the manipulated variable. Ideally, neither the study participants nor the researchers know which condition is being tested, making it “double-blind.” While such studies provide a high degree of confidence, it is unreasonable to demand this level of rigor across all forms of scientific evidence and all fields. In fact, it often does not even make sense.

Experimental studies are feasible where researchers can hold all other variables constant, which is possible in parts of physics, chemistry, and medicine. However, experimental designs are impractical or unethical in many other fields. A historian cannot alter past events to see their impact on the present. An astrophysicist cannot destroy one of two identical stars to compare what happens to their planetary systems. A developmental psychologist cannot raise the same child in two different environments to study the effects on development. Similarly, an organizational researcher cannot hold everything else in an organization constant while introducing a particular methodology.

While experimental designs are powerful, they are not suitable for every research question or applicable in every context. The systems studied are often too complex to control all potential variables, especially in the social sciences, climate science, and ecology, where myriad factors can influence outcomes (culture, personality, personal beliefs, events, etc.). Ethical considerations also rule out experiments in certain scenarios. We cannot subject teams to extreme stress or psychological insecurity merely to observe their reactions. Instead, scientists gather evidence using a range of methodologies, including observational studies, theoretical models, case studies, and meta-analyses, to build a comprehensive understanding of a phenomenon. Each method has its strengths and limitations, and the choice of method is dictated by the nature of the research question and the available resources.

Rather than dismissing evidence from diverse methodologies (qualitative, quantitative, survey studies, correlational analyses, etc.), we should evaluate this evidence to gauge the validity of a claim. Academic communities frequently publish meta-analyses and literature reviews that assess the scientific evidence surrounding topics like psychological safety, effective leadership styles, team stability, and scaling frameworks. Such resources can be found on platforms like Google Scholar (using the “Only reviews” filter).

In summary, the argument that we should only accept evidence from experimental, double-blind studies is unreasonable. This would effectively invalidate most scientific evidence and undermine the scientific method across many fields. Instead, we should familiarize ourselves with the scientific consensus regarding relevant topics and use that to discern which claims are based on evidence and which are mere illusions.

Implications for Your Practice

With my appeal, I do not expect everyone to conduct scientific research themselves. While I commend community-driven research, conducting rigorous scientific studies is a specialized profession.

It can be instructive to observe how more established professions operate. For instance, psychologists have non-commercial organizations like the APA that educate, train, and license psychologists. They also fund research initiatives and keep their members informed about scientific advancements. Likewise, engineers have the IEEE, architects have the AIA, and project managers have the PMI. Such professional bodies exist to safeguard their fields. In our profession, the Agile Alliance is the closest equivalent to these organizations. Other bodies in our sector, such as Scrum.org, Scrum Alliance, and Scaled Agile Inc., primarily sell training and certification. While they may invest in research, their main focus lies in commercial service provision rather than in enhancing the profession.

“Since we don’t have strong non-profit institutions in our profession like the APA, IEEE, PMI, or AIA, the responsibility to protect our profession is more up to the professionals themselves.”

Given the absence of robust non-profit organizations like the APA, IEEE, PMI, or AIA to provide independent guidance, the onus for safeguarding our profession rests more heavily on us as professionals. Here are some actions you can take:

- Avoid making sweeping claims to clients or on social media without substantiating them with solid evidence. An assertive claim, such as “SAFe is the worst thing that has ever happened to Agile,” can be expressed as an opinion but should be clearly marked as such (“In my experience…”). Anything less is, in my view, unprofessional.

- To evaluate the strength of evidence for a claim, consult the scientific consensus on the matter. Google Scholar is a great resource for this. I recommend searching for recent “review articles” (from the last two decades). I have written extensively about this process. Academic papers can be challenging to read, but the abstract, introduction, and conclusion usually convey the key findings. I am working to summarize the scientific consensus on several Agile-related topics, such as optimal team size, benefits of pair programming, the effectiveness of Scrum in smaller organizations, impacts of scaling on team performance, and effects of stress and motivation.

- You can contribute to scientific research by collaborating with academics like Daniel Russo, Nils Brede Moe, Maria Paasivaara, and Margaret-Anne Storey. They often seek participants or organizations for their studies.

- Politely challenge those making bold assertions on social media to provide their evidence. If their claims are founded solely on personal experience, hearsay, or business interests, we can set their statements aside until credible evidence is presented. By adopting this approach as a community, we can enhance the professionalism of our field.

Final Thoughts

In this article, I addressed some reactions to my call for our community to rely more on scientific inquiry and less on personal opinion. I also emphasized the ongoing need for more scientific research within our domain. Such an approach will enhance professionalism and allow us to deliver greater value to the teams and organizations we serve, while reducing the number of misleading claims. This endeavor will be challenging, and it is made harder by the lack of independent non-profit organizations to support it, but we must collectively begin to move in this direction. I have outlined several actions you can take in your practice to support this effort.